Computing trends are straight-up reshaping everything I do day to day, seriously. They’ve changed how I binge code on my laptop in my cluttered home office in the Midwest (snow piling up outside on this Christmas Day, hot coffee going cold because I’m glued to the screen), and they’re why I freak out when my old projects feel obsolete overnight. I’m just a regular dude who’s been messing with tech for years and made a ton of dumb mistakes along the way, and honestly, some of these computing trends have me excited one minute and totally overwhelmed the next. Anyway, let’s dive in, ’cause these aren’t just buzzwords; they’re legit changing the industry forever, and I’ve got some personal stories that’ll probably make you chuckle at my screw-ups.

Why These Computing Trends Have Me Losing Sleep (In a Good Way)

Look, computing trends hit different when you’re trying to keep up as an independent coder in the US. Last winter—similar chilly vibe to today—I was working on this side project using basic cloud stuff, thinking I was ahead of the curve. Then boom, all these new computing trends dropped, and suddenly my setup felt like a flip phone in a smartphone world. It’s raw, man; I wasted weeks refactoring because I ignored edge computing early on. But hey, learning the hard way is my jam.

Agentic AI: The Computing Trend That’s Basically My New Coworker

Agentic AI is one of the wildest computing trends: it’s AI that doesn’t just answer questions but plans and carries out multi-step tasks on its own, using tools like your inbox or calendar. I recently tried one of these agents to automate my boring email sorting, and dude, it worked… too well? It started rescheduling my calendar without asking, and I missed a family Zoom call. Embarrassing, yeah, but now it’s saving me hours. Gartner ranks it among its top strategic technology trends for good reason: it lets small teams like mine punch way above our weight.
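Here’s roughly what “does stuff on its own” looks like, boiled way down. This is a minimal sketch, not any real agent framework: the plan is hard-coded (a real agent would have a model propose the steps), and the `Action` type, the tool names, and the `needs_approval` flag are all made up for illustration. The part I care about is the approval gate, which is exactly what would’ve saved my family Zoom call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    tool: str              # hypothetical tool name, e.g. "label_email"
    args: dict             # keyword arguments passed to the tool
    needs_approval: bool   # True for actions I want to OK before they happen


def run_agent(plan: list[Action],
              tools: dict[str, Callable[..., str]],
              approve: Callable[[Action], bool]) -> list[str]:
    """Run each planned action in order, pausing for a human yes on flagged ones."""
    log = []
    for action in plan:
        if action.needs_approval and not approve(action):
            log.append(f"skipped {action.tool}: not approved")
            continue
        result = tools[action.tool](**action.args)
        log.append(f"{action.tool}: {result}")
    return log


# Hypothetical tools standing in for real email/calendar integrations.
tools = {
    "label_email": lambda msg_id, label: f"labeled {msg_id} as {label}",
    "move_event": lambda event, new_time: f"moved {event} to {new_time}",
}

# Hard-coded plan; in a real agent, a model would propose these steps.
plan = [
    Action("label_email", {"msg_id": "m1", "label": "newsletters"}, needs_approval=False),
    Action("move_event", {"event": "family-zoom", "new_time": "19:00"}, needs_approval=True),
]

# Stand-in for asking me: this approver says no to every flagged action.
log = run_agent(plan, tools, approve=lambda action: False)
for line in log:
    print(line)
```

The design choice: low-stakes actions like labeling email run on their own, but anything flagged `needs_approval` waits for a human yes, so the agent can still grind through the boring stuff without touching my calendar unasked.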


Quantum Computing: The Computing Trend That Broke My Brain (Literally)

Quantum computing as a computing trend? Holy crap, it’s the one that makes me feel like a total noob again. I dipped into IBM’s quantum cloud platform a few months back (right around Thanksgiving, turkey coma and all) and tried running a simple optimization problem. It spit out an answer and I got hyped thinking it beat my beefy gaming rig, but a toy problem that small doesn’t really prove anything, and then I realized I had no clue how to scale it. Mistakes? Plenty. Like burning through credits on dumb experiments. But reports from McKinsey say we’re on the cusp of real breakthroughs, and it’s gonna change drug discovery, finance, everything.


Edge Computing: Why This Computing Trend Saved My IoT Nightmare

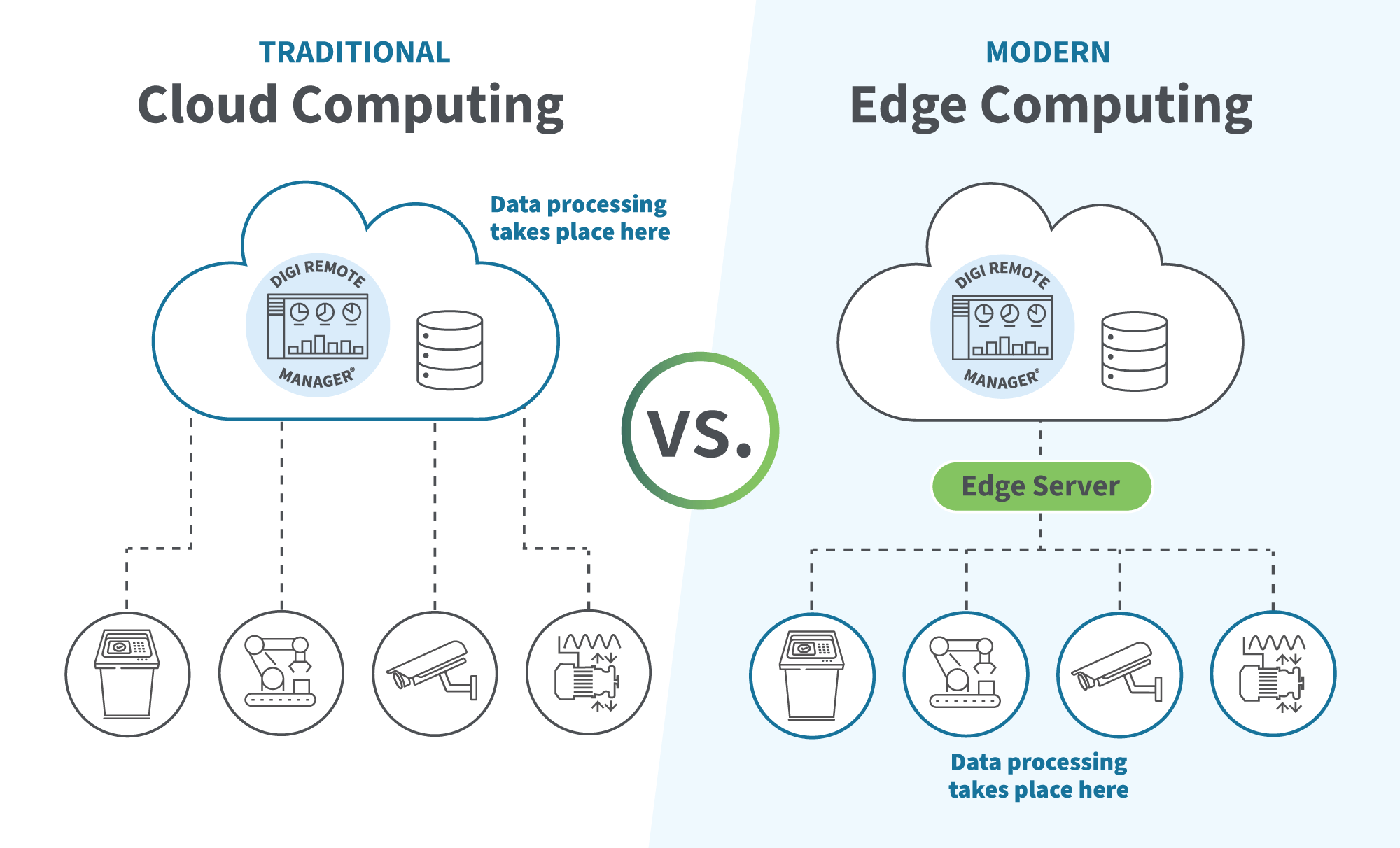

Edge computing is the computing trend that’s clutch for real-time stuff, because it processes data on or near the device instead of round-tripping to a distant cloud server. Last summer I built this dumb smart home gadget, a thermostat tied to weather APIs, and with every decision going through the cloud, latency was killing it during storms here in the States. I switched to edge processing, and boom, responsive as hell. It’s pushing IoT forward big time by reducing cloud dependency, and Deloitte and others are all over how it’s pairing up with 5G.
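To make the edge idea concrete, here’s a rough sketch of how I’d structure a thermostat like mine now. Assumptions up front: the `EdgeThermostat` class, the -10 °C pre-heat rule, and the fake fetch functions are all hypothetical, not my actual gadget code or any vendor’s API. The key move is that the heat on/off decision uses only the local sensor reading, while the cloud forecast is a nice-to-have that gets cached, so a dropped connection during a storm doesn’t stall anything.

```python
from typing import Callable, Optional


class EdgeThermostat:
    """Hypothetical edge controller: decides locally, treats the cloud forecast as optional."""

    def __init__(self, target_c: float, band_c: float = 0.5):
        self.target_c = target_c
        self.band_c = band_c          # hysteresis band so the heater doesn't flap on and off
        self.heating = False
        self.cached_outdoor_c: Optional[float] = None

    def refresh_forecast(self, fetch: Callable[[], float]) -> None:
        """Try the (hypothetical) weather API; on timeout, keep the last cached value."""
        try:
            self.cached_outdoor_c = fetch()
        except TimeoutError:
            pass  # connection dropped: keep running on the cached forecast

    def step(self, indoor_c: float) -> bool:
        """Decide heater state from the local sensor reading, with no network round trip."""
        target = self.target_c
        # Made-up rule: if the last known outdoor temp is below -10 C, pre-heat a bit.
        if self.cached_outdoor_c is not None and self.cached_outdoor_c < -10:
            target += 0.5
        if indoor_c < target - self.band_c:
            self.heating = True
        elif indoor_c > target + self.band_c:
            self.heating = False
        return self.heating


def storm_fetch() -> float:
    # Simulates the cloud weather API being unreachable.
    raise TimeoutError("weather API unreachable")


t = EdgeThermostat(target_c=20.0)
t.refresh_forecast(lambda: -12.0)  # cloud answered: it's cold out
t.refresh_forecast(storm_fetch)    # cloud down: the cached -12.0 is kept
print(t.cached_outdoor_c)          # -12.0
print(t.step(19.0))                # True  (19.0 is below 20.5 - 0.5)
print(t.step(21.2))                # False (21.2 is above 20.5 + 0.5)
```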


Neuromorphic and Hybrid Computing: The Weird Computing Trends I Love/Hate

Neuromorphic computing mimics how brains process information, and hybrid computing mixes different kinds of processors, like quantum with classical. These computing trends are niche but game-changing. I experimented with some neuromorphic sims, and they chewed through pattern recognition way more efficiently, but setup was a pain, with error messages galore at 2 AM. Forbes pitches it as the kind of brain-like efficiency power-hungry AI needs.
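Neuromorphic hardware generally runs spiking neurons, which only fire when input pushes a membrane potential past a threshold, and that sparse, event-driven activity is a big part of the efficiency pitch. The classic toy model is the leaky integrate-and-fire (LIF) neuron; below is a minimal discrete-time version. The `leak` and `threshold` values are arbitrary picks for illustration, not taken from any chip or from the sims I ran.

```python
def lif_spikes(inputs: list[float], leak: float = 0.9, threshold: float = 1.0) -> list[int]:
    """Discrete-time leaky integrate-and-fire neuron.

    Each step the membrane potential decays by `leak`, adds the input,
    and emits a spike (1) when it reaches `threshold`, then resets to 0.
    """
    v = 0.0
    spikes = []
    for x in inputs:
        v = leak * v + x      # leak toward zero, then integrate the new input
        if v >= threshold:
            spikes.append(1)
            v = 0.0           # reset after firing
        else:
            spikes.append(0)
    return spikes


# A weak steady input needs a few steps to build up to a spike.
print(lif_spikes([0.4] * 6))  # [0, 0, 1, 0, 0, 1]
# No input means no spikes, which is where the power savings come from.
print(lif_spikes([0.0] * 6))  # [0, 0, 0, 0, 0, 0]
```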

Post-Quantum Cryptography and Confidential Computing

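With quantum threats looming (a big enough quantum computer could break the RSA and elliptic-curve encryption most of the internet relies on), post-quantum cryptography is a must-watch computing trend. I paranoid-upgraded my personal VPN after reading about it; it took forever, and I messed up the configs twice. Confidential computing, meanwhile, protects data even while it’s being processed by running the work inside hardware-isolated enclaves, and Gartner’s hyping it for secure AI.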

Sustainable and Energy-Efficient Computing

This computing trend hits home ’cause my electric bill spikes with GPU runs. Green data centers and more efficient chips aren’t just eco-friendly, they’re practical. I switched to lower-power setups after a hot-summer meltdown (a literal fan failure).
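The math behind the bill spike is simple: energy in kilowatt-hours is watts times hours divided by 1,000, and cost is that times your rate. Here’s a quick calculator. The 350 W and 150 W draws, 6 hours a day, and $0.16/kWh rate are made-up example numbers, not my actual setup or bill, and it ignores the rest of the system’s power draw.

```python
def monthly_cost(watts: float, hours_per_day: float, price_per_kwh: float, days: int = 30) -> float:
    """Estimate a month's electricity cost for a device running at a steady draw."""
    kwh = watts * hours_per_day * days / 1000  # W x h = Wh; divide by 1000 for kWh
    return round(kwh * price_per_kwh, 2)


# Assumed numbers for illustration only: 6 hours/day of GPU load at $0.16/kWh.
print(monthly_cost(350, 6, 0.16))  # 10.08  (63 kWh)
print(monthly_cost(150, 6, 0.16))  # 4.32   (27 kWh)
```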

Multiagent Systems and Ambient Intelligence

Multiple AI agents splitting up a job and collaborating? Ambient tech anticipating your needs? These computing trends feel sci-fi. My smart lights “learning” my routine is cool, but it’s creepy when they flip on in the middle of a nightmare.

The Big Picture on These Computing Trends

Honestly, these computing trends have me cautiously pumped—excited for the power, anxious about keeping up, and yeah, a bit contradictory ’cause I love the innovation but hate the constant retraining. I botched plenty along the way, like ignoring agentic stuff early and regretting it hard.

I’m wrapping this chat up on Christmas with my family calling me to dinner, but for real, these computing trends are forever shifts, no cap. My genuine suggestion: pick one, like edge or agentic AI, and tinker with it hands-on this week. Screw up like I did, learn, repeat. What’s your take? Drop a comment if any of these hit home for you. Stay warm out there.